Why We Need to Rethink LLM Evaluation Right Now

For years, evaluating large language models felt simple: throw a prompt at the model, score the answer, publish the leaderboard. That era is officially over.

Modern LLMs no longer behave like “text in → text out” systems. They plan. They take actions. They call tools. They collaborate with other agents. They correct themselves. In other words, they’re turning into autonomous problem-solvers, not autocomplete engines.

Yet… our benchmarks are still frozen in the past.

If we want to understand what today’s agentic AI systems can actually do, we need to stop measuring static snapshots and start measuring long-horizon behavior, adaptability, coordination, and resilience. The next breakthroughs in AI won’t come from bigger models alone; they’ll come from smarter systems.

Let’s explore what the future of benchmarking really looks like.

From Static Models to Agentic Intelligence

LLMs used to be passengers; now they’re drivers.

A modern agentic system can:

- Break a problem into steps

- Retrieve missing information

- Use tools or APIs

- Collaborate with other specialized agents

- Reflect and retry

- Maintain temporary reasoning state

This shift transforms evaluation. A single prompt cannot capture the abilities of a system that may make 50 or more decisions across a workflow. Imagine evaluating a human by asking only one question. That’s what we’re doing with LLMs today.
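To see why one question says so little about a 50-decision workflow, here is a rough back-of-the-envelope sketch. It assumes every step succeeds independently with the same probability, which is a simplification (real agents can recover from errors, and errors can also cascade). The function name and the 0.98 figure are illustrative assumptions, not measurements.

```python
def task_success_probability(per_step_success: float, num_steps: int) -> float:
    """Chance that every step succeeds, ASSUMING steps are independent."""
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return per_step_success ** num_steps


# Hypothetical agent that gets each individual step right 98% of the time.
for steps in (1, 10, 50):
    print(steps, round(task_success_probability(0.98, steps), 3))
# prints:
# 1 0.98
# 10 0.817
# 50 0.364
```

Under these assumptions, a system that looks 98% reliable on a single-step test finishes a 50-step task only about a third of the time. A single-turn score cannot reveal that gap.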

The Limits of Traditional Benchmarks

Classical benchmarks like MMLU, TruthfulQA, ARC, or GSM8K were groundbreaking, but they evaluate only one frame of a full movie.

Here’s what they miss:

- No temporal reasoning

Can the model stay coherent across dozens of steps without drifting? Static benchmarks can’t tell.

- No action-based evaluation

Agents need to execute plans and interact with environments. Single-turn tests ignore tool use, API calls, re-planning, and error recovery.

- No robustness measurement

If an agent hits the wrong path mid-task, does it give up or navigate back? Old benchmarks never ask.

- No multi-agent coordination

Many systems now rely on teams of specialized agents. Inter-agent communication? Not measured.

We’re benchmarking calculators while building autonomous assistants.

The Rise of Agentic Benchmarks

Thankfully, a new wave of benchmarks is emerging.

Frameworks like AgentBench, AgentBoard, and AgenticBench evaluate LLMs inside dynamic environments where actions matter as much as answers.

Instead of static questions, these benchmarks test:

- Multi-step problem solving

- Planning tasks (e.g., navigation, scheduling, research workflows)

- Tool-use correctness

- Adaptability in changing environments

- Long-horizon consistency

- Multi-agent collaboration

This is where real intelligence shows up. But even these benchmarks are only the beginning.
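To make "actions matter as much as answers" concrete, here is a minimal sketch of an environment-based evaluation loop. It is a toy and does not reproduce how any of the benchmarks named above work. `CounterEnv`, `greedy_agent`, and `evaluate` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EpisodeResult:
    success: bool
    steps_taken: int


class CounterEnv:
    """Toy environment: start at 0 and reach `target` via 'inc' / 'dec' actions."""

    def __init__(self, target: int, max_steps: int = 20):
        self.target = target
        self.max_steps = max_steps
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: str) -> int:
        if action == "inc":
            self.state += 1
        elif action == "dec":
            self.state -= 1
        # Any other action leaves the state unchanged but still costs a step.
        return self.state

    def done(self) -> bool:
        return self.state == self.target


# An agent maps (observation, target) to an action string.
Agent = Callable[[int, int], str]


def run_episode(env: CounterEnv, agent: Agent) -> EpisodeResult:
    obs = env.reset()
    steps = 0
    while not env.done() and steps < env.max_steps:
        obs = env.step(agent(obs, env.target))
        steps += 1
    return EpisodeResult(success=env.done(), steps_taken=steps)


def greedy_agent(obs: int, target: int) -> str:
    return "inc" if obs < target else "dec"


def evaluate(agent: Agent, targets: List[int], max_steps: int = 20) -> Dict[str, float]:
    results = [run_episode(CounterEnv(t, max_steps), agent) for t in targets]
    return {
        "success_rate": sum(r.success for r in results) / len(results),
        "mean_steps": sum(r.steps_taken for r in results) / len(results),
    }


report = evaluate(greedy_agent, targets=[3, -2, 5])
print(report)
```

The score here comes from what the agent did across a whole episode (did it reach the goal, and in how many steps), not from a single answer string. Real agentic benchmarks swap the toy counter for web pages, databases, or operating-system shells, but the episode-level scoring idea is the same.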

What the Future of Benchmarking Should Measure

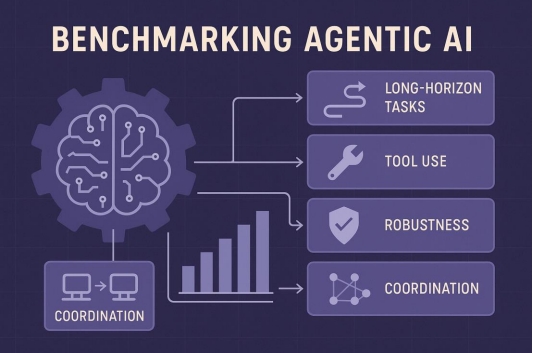

Agentic AI introduces new dimensions that must become core metrics.

Here’s a blueprint for next-generation evaluation:

- Long-Horizon Stability

Does the agent maintain reasoning quality across 10, 20, or 50 steps without collapsing?

- Tool-Use Reliability

It’s not enough to call a tool – we must measure:

  - Correctness

  - Error detection

  - Error recovery

  - Safety constraints

  - Efficient tool selection

- Memory & Context Management

Can the agent store, reference, update, and forget information appropriately?

Memory isn’t a luxury; it’s a necessity.

- Planning & Re-planning

Real intelligence is measured not by perfect plans but by adaptive plans.

- Inter-Agent Communication Quality

When multiple agents collaborate, we must evaluate:

  - Communication clarity

  - Agreement formation

  - Conflict resolution

  - Redundancy avoidance

- RAG-Enhanced Behavior

RAG systems must be tested not just on retrieval accuracy, but on:

  - Citation consistency

  - Hallucination reduction

  - Relevance filtering

  - Retrieval-reasoning integration

- Self-Monitoring & Self-Correction

Can an agent notice it’s wrong? Can it repair itself without human prompts?

These dimensions paint a richer, more realistic picture of intelligence.
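As one illustration of how a dimension from this blueprint could become a number, here is a small sketch that scores tool-use reliability from a recorded list of tool calls. The `ToolCall` fields and the three ratios are assumptions made for this example, not an established standard. It also assumes something else (a grader or test harness) has already labeled each call as correct or not.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ToolCall:
    tool: str
    succeeded: bool                    # did the call produce a correct result?
    agent_flagged_error: bool = False  # did the agent notice the failure?
    recovered: bool = False            # did a later retry fix the failure?


def _ratio(numerator: int, denominator: int) -> Optional[float]:
    # None means "undefined", e.g. no failures to detect or recover from.
    return numerator / denominator if denominator else None


def tool_use_metrics(calls: List[ToolCall]) -> Dict[str, Optional[float]]:
    failures = [c for c in calls if not c.succeeded]
    detected = [c for c in failures if c.agent_flagged_error]
    recovered = [c for c in failures if c.recovered]
    return {
        "correctness": _ratio(len(calls) - len(failures), len(calls)),
        "error_detection": _ratio(len(detected), len(failures)),
        "error_recovery": _ratio(len(recovered), len(failures)),
    }


# Hypothetical trace: four calls, two of which failed.
trace = [
    ToolCall("search", succeeded=True),
    ToolCall("calculator", succeeded=False, agent_flagged_error=True, recovered=True),
    ToolCall("search", succeeded=False),  # silent failure: never noticed
    ToolCall("calendar", succeeded=True),
]
metrics = tool_use_metrics(trace)
print(metrics)
# {'correctness': 0.5, 'error_detection': 0.5, 'error_recovery': 0.5}
```

Separating detection from recovery matters: an agent that notices a failure but cannot fix it behaves very differently from one that never notices at all, and a single accuracy number hides that difference.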

The Missing Ingredient: Observability

Agentic systems are messy. They involve dozens of steps, internal states, messages, tool calls, memory updates, and retries. Without proper observability, benchmarking becomes guesswork.

We need:

- Workflow traces

- Interaction graphs

- Step-level logs

- Performance dashboards

- Error-path visualizations

- Benchmark-level analytics

- Agent-to-agent conversation auditing

Observability isn’t an add-on; it’s the foundation. If we can’t see inside the agent, we can’t evaluate the agent.
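What might a step-level log look like in practice? Here is a minimal sketch of a trace recorder that captures each plan, tool call, message, and retry as a structured event and exports them as JSON lines. `TraceRecorder`, `TraceEvent`, and the event kinds are hypothetical names for this illustration; a production setup would more likely build on an existing tracing stack.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class TraceEvent:
    step: int
    agent: str
    kind: str                  # e.g. "plan", "tool_call", "message", "retry"
    payload: Dict[str, Any]
    timestamp: float = field(default_factory=time.time)


class TraceRecorder:
    """Collects step-level events for one agent run."""

    def __init__(self) -> None:
        self.events: List[TraceEvent] = []

    def record(self, agent: str, kind: str, **payload: Any) -> TraceEvent:
        event = TraceEvent(
            step=len(self.events) + 1, agent=agent, kind=kind, payload=payload
        )
        self.events.append(event)
        return event

    def to_jsonl(self) -> str:
        # One JSON object per line, convenient for log pipelines and dashboards.
        return "\n".join(json.dumps(asdict(e)) for e in self.events)

    def counts_by_kind(self) -> Dict[str, int]:
        counts: Dict[str, int] = {}
        for event in self.events:
            counts[event.kind] = counts.get(event.kind, 0) + 1
        return counts


recorder = TraceRecorder()
recorder.record("planner", "plan", goal="summarize report")
recorder.record("executor", "tool_call", tool="search", ok=False)
recorder.record("executor", "retry", tool="search", ok=True)

print(recorder.counts_by_kind())
# {'plan': 1, 'tool_call': 1, 'retry': 1}
print(recorder.to_jsonl())
```

Once every step is a structured event, the other items on the list (interaction graphs, error-path views, agent-to-agent audits) become queries over the same data rather than separate instrumentation projects.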

Conclusion: Benchmarking Must Evolve With AI

We are moving from LLMs that answer questions to agents that solve problems.

From single-turn prompts to multi-step plans.

From isolated models to cooperative systems.

Our evaluation methods must evolve, too.

The future of benchmarking is:

- Dynamic

- Long-horizon

- Action-based

- Collaborative

- Observable

- Robust

- Realistic

If we measure the right things, we can push AI toward the right future. And that future is being built right now.