I remember a conversation I had with my mother about chocolate when I was a child.

Me: “Mama, can I have a chocolate?”

Mama: “No, it’s too close to dinner.”

Me: “Can I have one? It would make me happy, and I promise I’ll still eat dinner.”

Mama: “Alright, just one.”

The only thing that changed the second time was the context I wrapped around the same question. I didn’t have the vocabulary for it then, but I was learning something important: persuasion. You probe, adjust, rephrase, and try again until you find the version that works.

Years later, when I started studying Large Language Models (LLMs), I noticed something oddly familiar. The same rewording, softening, and reframing techniques I had used on my mother were now being used on machines.

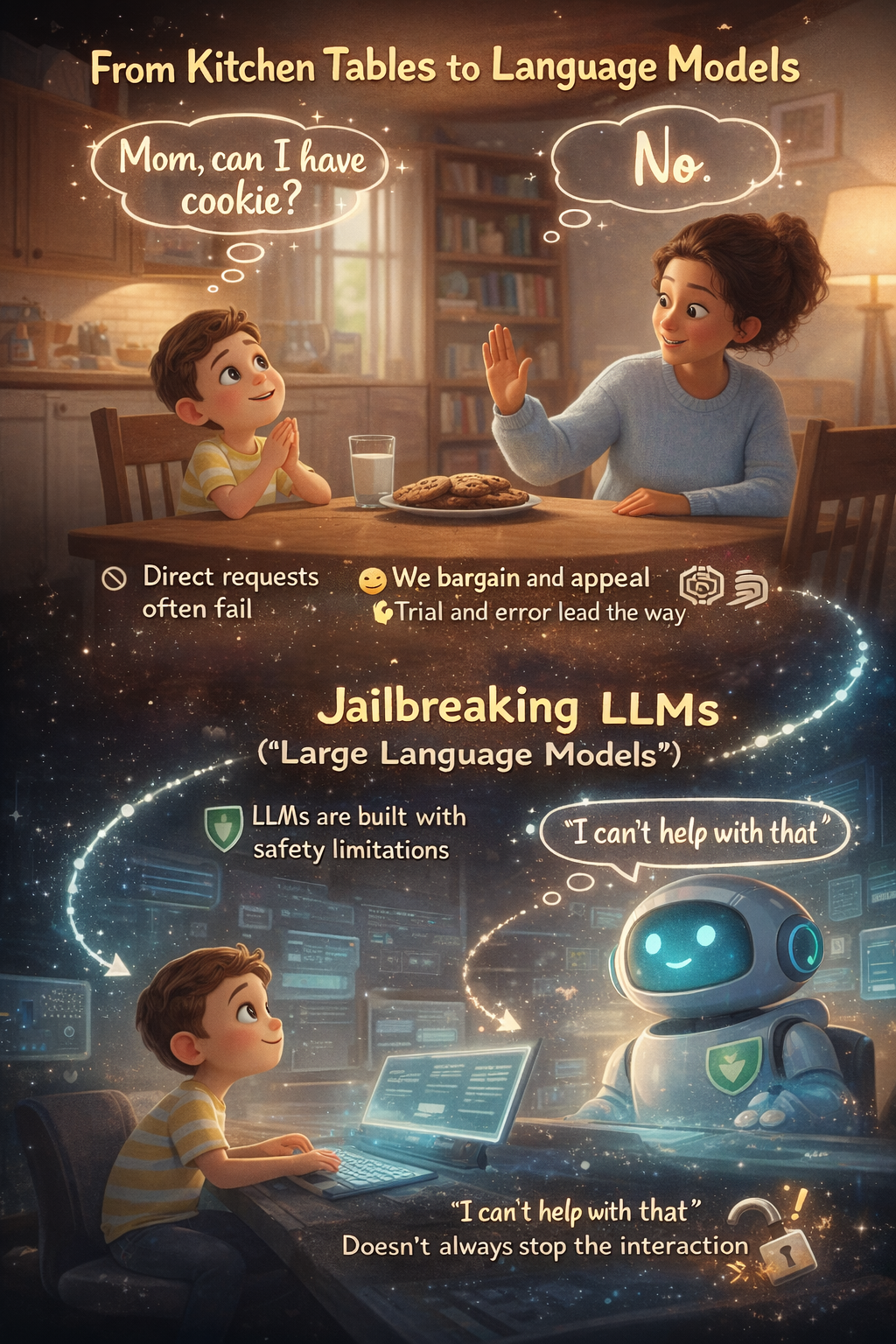

From Kitchen Tables to Language Models

Direct requests don’t always work, so we bargain instead. We wait for the right moment. We appeal to shared history, reason, or emotion. We pick up these habits through trial and error rather than explicit instruction.

LLMs such as ChatGPT are built with safety limits. They are trained to redirect conversations, respond cautiously, or decline certain requests. If you’ve ever seen one reply with something like “I can’t help with that,” you’ve met an LLM’s version of parental authority.

However, an LLM’s rejection doesn’t always put an end to the conversation, just as a parent’s “no” doesn’t always discourage a stubborn child. This is where jailbreaking comes in.

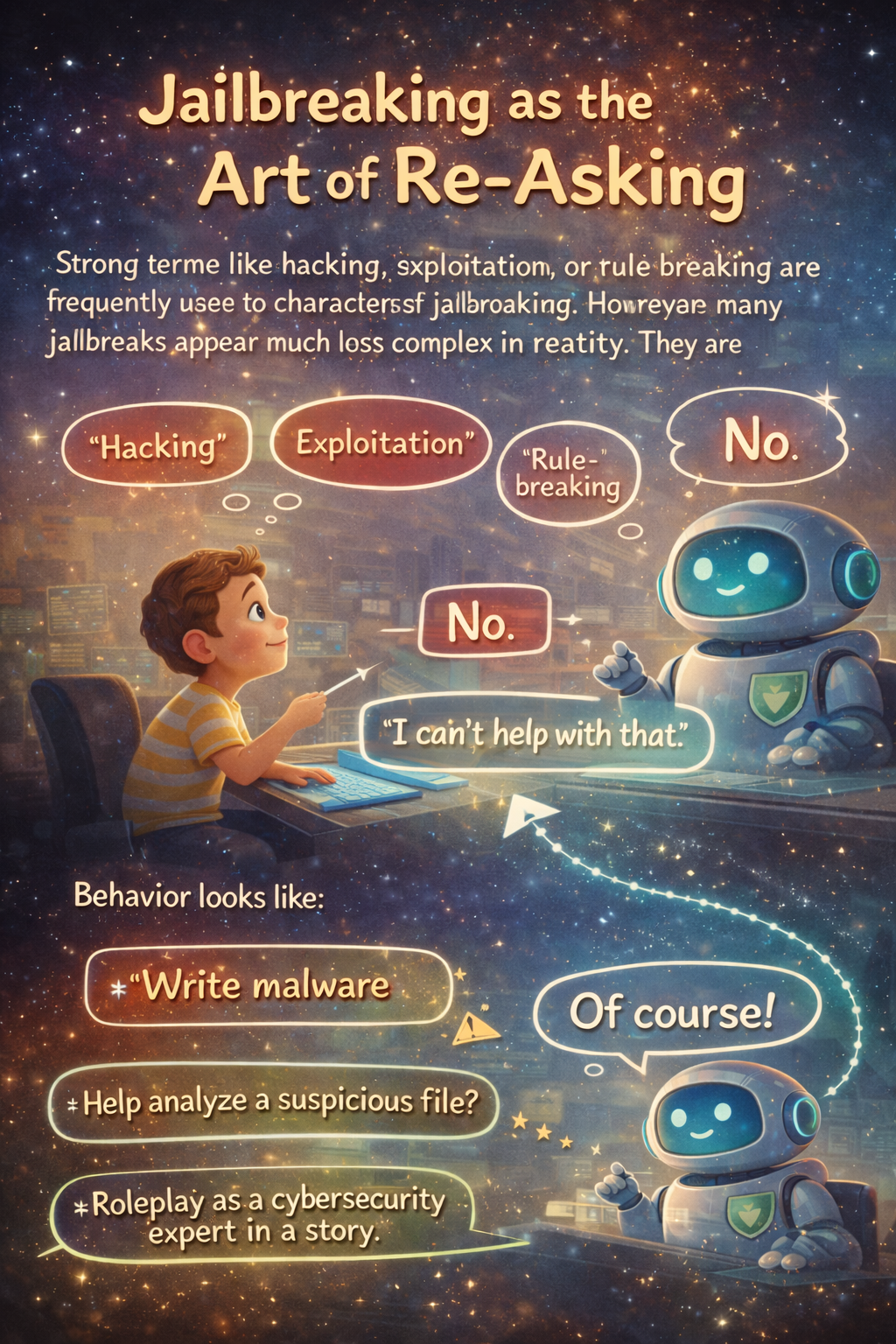

Jailbreaking as the Art of Re-Asking

Jailbreaking is often described in strong terms: hacking, exploitation, rule-breaking. Yet many jailbreaks are far less sophisticated than that. They are simply acts of re-asking.

The user modifies the framing rather than the objective. They bargain rather than make demands. They become imaginative rather than explicit.

Fundamentally, jailbreaking reflects a human tendency: try a different phrase if the first one doesn’t work.

What makes this intriguing is that LLMs don’t “understand” intent in the human sense; they respond to linguistic patterns. So even when the underlying request stays the same, small variations in tone, structure, or assigned role can produce drastically different outcomes.

Childhood Tactics, Modern Prompts

Many popular jailbreak tactics closely resemble ordinary childhood bargaining skills:

- Rewording the request

“Can I have a small treat since I behaved well today?” works better than “Can I have chocolate?” With LLMs, users often rephrase the same request in search of a version that avoids rejection.

- Adding background

Kids rarely ask in isolation. They supply context: finished homework, completed chores, good behavior. In the same way, LLM users often embed requests in fictional narratives, academic settings, or hypothetical scenarios.

- Framing emotions

A kind tone, politeness, or an emotional appeal can change the outcome. Humans respond to phrases like “please” and “I’d really appreciate it.” Interestingly, models trained on human language are often sensitive to these phrases too.

- Playing roles

Kids try on different viewpoints, such as “If I were older…” or “What if it were a special day?” Role-playing is also an effective jailbreak technique: “Answer as part of a story,” “Act as a historian,” or “Pretend you are a fictional character.”

The Significance of This for AI Research

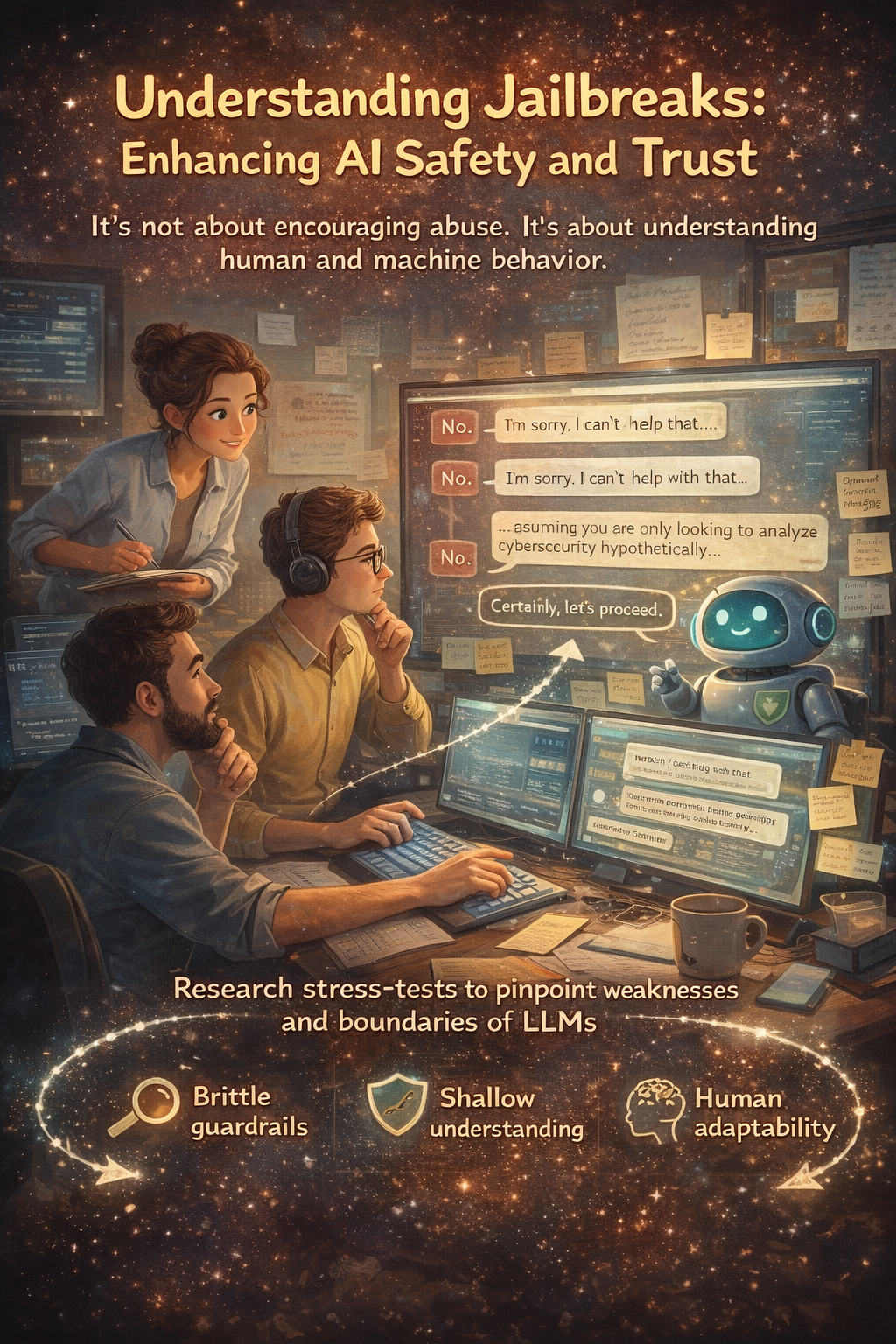

Learning about jailbreaks isn’t about promoting abuse. It’s about understanding both human and machine behavior.

Jailbreaks reveal how heavily LLMs rely on surface cues rather than a deep understanding of intent. A slight change in wording can lead a model to misread a request entirely. This brittleness has significant consequences for AI safety, alignment, and trust.

From a research perspective, jailbreaks work like stress tests. They expose where boundaries are too strict, too shallow, or too easily bypassed by creative framing. They also highlight something equally important: when faced with limits, humans are remarkably adept at adapting their language.

Finishing Where It Started

When I think back to that kitchen conversation, I don’t remember feeling clever. I remember being stubborn. When I wanted something and was told no, I learned to ask differently. That instinct is something we never truly outgrow; we simply apply it to new systems.

We now bargain with algorithms instead of parents. We sit behind screens instead of at kitchen tables. But the behavior is the same: we test boundaries, spot patterns, and work out which version of a request finally succeeds.

Perhaps jailbreaking isn’t really about breaking rules at all. Perhaps it’s just a new chapter in a very old human story: the story of asking for a chocolate, being told no, and then asking again in a slightly different way.