Summary: Generative AI (GenAI) is everywhere, from product planning to prototyping. But indiscriminate use can create ethical pitfalls, privacy issues, and poor decisions. In this post, we show how to integrate GenAI responsibly into each phase of user-centered design (UCD, ISO 9241-210), with practical opportunities and essential safeguards.

Why this matters now

- Productivity: teams already use GenAI to write, analyze data, and prototype.

- Real risk: without a method, you get hallucinations, bias, and data exposure.

- Fear of replacement: the right mindset treats AI as a copilot that augments people rather than replacing them.

UCD in 10 seconds (ISO 9241-210): an iterative process with four phases: (1) understand and specify the context of use, (2) specify user and organizational requirements, (3) produce design solutions, and (4) evaluate the design against requirements, iterating until user needs are met.

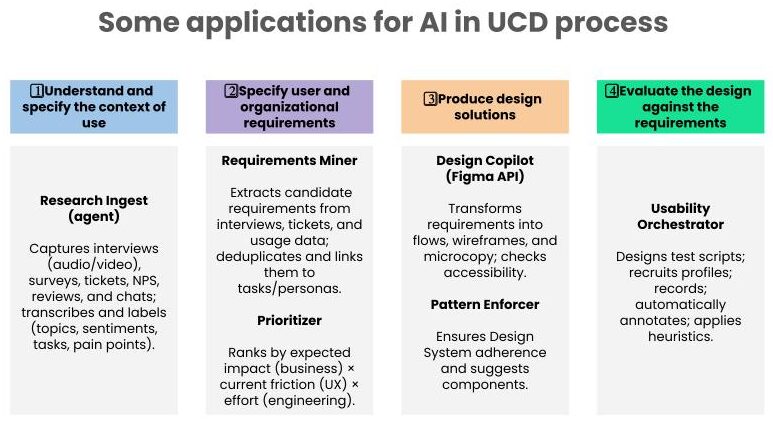

How to apply GenAI in each UCD phase

1) Understand and specify the context of use

Goal: know your users, tasks, environment, and constraints.

How AI helps:

- Usage & behavior analysis: log clustering, navigation analysis, AI-powered heatmaps (e.g., Hotjar with ML).

- NLP for interviews/surveys: auto-transcription, sentiment analysis, and topic extraction.

- Dynamic personas: generation and updates from GA4, Mixpanel, and CRM data.

Typical deliverables: stakeholder map, journey map, living personas, interview thematic summary.
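To make the interview thematic summary concrete, here is a minimal sketch of the tagging step. Production NLP pipelines would typically use a language model or topic model; this version swaps in a transparent keyword codebook so a researcher can audit every assignment. The `CODEBOOK` themes and all function names are hypothetical, chosen only for illustration.

```python
import re
from collections import Counter

# Hypothetical theme codebook; in practice researchers define and refine it.
CODEBOOK = {
    "onboarding": {"signup", "onboarding", "tutorial", "first"},
    "performance": {"slow", "loading", "lag", "timeout"},
    "pricing": {"price", "pricing", "expensive", "plan"},
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def tag_excerpts(excerpts: list[str]) -> dict[str, list[str]]:
    """Assign each excerpt to every theme whose keywords it mentions."""
    tagged: dict[str, list[str]] = {theme: [] for theme in CODEBOOK}
    for excerpt in excerpts:
        tokens = tokenize(excerpt)
        for theme, keywords in CODEBOOK.items():
            if tokens & keywords:  # any shared keyword tags the excerpt
                tagged[theme].append(excerpt)
    return tagged


def theme_summary(excerpts: list[str]) -> list[tuple[str, int]]:
    """Rank themes by how many excerpts mention them (ties keep codebook order)."""
    counts = Counter({theme: len(hits) for theme, hits in tag_excerpts(excerpts).items()})
    return counts.most_common()
```

A GenAI model could propose candidate themes and keywords, but keeping the codebook explicit lets a researcher review and correct it before the summary feeds personas or journey maps.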

2) Specify user and organizational requirements

Goal: translate needs into functional and non-functional requirements.

How AI helps:

- Automatic requirements extraction from interviews, support tickets, and forums.

- Risk/impact-based prioritization using historical data.

- User story & acceptance criteria generation via well-structured prompts.

Typical deliverables: prioritized backlog, value-vs-effort matrix, testable acceptance criteria.
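Risk/impact-based prioritization works best with a transparent scoring rule the team agrees on before any AI suggests values. The sketch below assumes 1-5 scales for impact, frequency, and effort, plus a hypothetical score of impact × frequency ÷ effort and a simple value-vs-effort quadrant. The weights, threshold, and names are assumptions to adapt, not a standard.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    title: str
    impact: int     # 1-5: estimated user/business value (assumed scale)
    frequency: int  # 1-5: how often the need appears in tickets/interviews
    effort: int     # 1-5: estimated delivery effort

    @property
    def score(self) -> float:
        """Hypothetical priority score: value signals divided by effort."""
        return (self.impact * self.frequency) / self.effort


def quadrant(item: BacklogItem, threshold: int = 3) -> str:
    """Place an item in a simple value-vs-effort matrix."""
    high_value = item.impact >= threshold
    high_effort = item.effort >= threshold
    if high_value and not high_effort:
        return "quick win"
    if high_value and high_effort:
        return "major project"
    if not high_value and not high_effort:
        return "fill-in"
    return "deprioritize"


def prioritize(items: list[BacklogItem]) -> list[BacklogItem]:
    """Sort the backlog from highest to lowest score."""
    return sorted(items, key=lambda item: item.score, reverse=True)
```

Keeping the formula explicit lets the team check AI-suggested scores against historical data instead of accepting a ranking nobody can explain.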

3) Produce design solutions

Goal: create flows, wireframes, and prototypes.

How AI helps:

- Text-to-wireframe/UI (e.g., Figma + Magician, Uizard).

- Generative design: multiple layout variations based on heuristics and usage data.

- Prototyping assistants: suggest accessible components aligned with the design system.

Typical deliverables: annotated flows, accessible components, clickable prototypes.
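One safeguard that fits this phase: before accepting colors suggested by a prototyping assistant, check them against the WCAG 2.x contrast requirement (at level AA, 4.5:1 for normal text and 3:1 for large text). The sketch below implements the WCAG relative-luminance and contrast-ratio formulas; the function names are hypothetical, and it covers only color contrast, not accessibility as a whole.

```python
def _channel(c: int) -> float:
    """Linearize one 8-bit sRGB channel as defined in WCAG 2.x."""
    s = c / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05), ranging from 1 to 21."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, gray `#777777` on white comes out at roughly 4.48:1, so it fails AA for body text even though it looks readable, while the slightly darker `#767676` passes.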

4) Evaluate the design against requirements

Goal: verify usability and experience against defined criteria.

How AI helps:

- AI-assisted usability testing: simulate flows and detect friction points.

- Real-time feedback analysis: cluster comments/support tickets.

- Predictive eye-tracking: estimate attention patterns without in-person tests.

Typical deliverables: findings report, severity-ranked issues, remediation backlog.
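Clustering comments and support tickets usually relies on text embeddings. As a minimal, dependency-free sketch, the example below swaps in Jaccard similarity over word sets with a greedy single pass: each comment joins the first cluster whose seed comment is similar enough, otherwise it starts a new cluster. The 0.3 threshold and the function names are assumptions; cluster size is only a rough input to severity ranking, which still needs a researcher's judgment.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens, ignoring words of two letters or fewer."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 2}


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_feedback(comments: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy single-pass clustering of comments by word overlap with each cluster's seed."""
    clusters: list[tuple[set[str], list[str]]] = []
    for comment in comments:
        toks = tokens(comment)
        for seed, members in clusters:
            if jaccard(toks, seed) >= threshold:
                members.append(comment)
                break
        else:  # no similar cluster found: this comment seeds a new one
            clusters.append((toks, [comment]))
    # Largest clusters first, as a rough proxy for how widespread an issue is
    return sorted((members for _, members in clusters), key=len, reverse=True)
```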

Top risks—and how to mitigate them

1) Hallucinations (plausible but false content)

Risk: wrong product decisions, contaminated documentation.

Mitigate with: human-in-the-loop review, verifiable sources (grounding on internal data), citation policies, and systematic evaluation (accuracy, completeness, consistency).
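A citation policy is easier to enforce when it can be checked automatically. This sketch assumes a hypothetical convention in which the model must cite grounding documents as `[doc-N]`; it flags sentences with no citation and citations that point outside the set of documents actually retrieved. It does not verify that a cited document supports the claim, which still needs human review.

```python
import re

# Hypothetical citation format, e.g. "[doc-12]"
CITATION = re.compile(r"\[(doc-\d+)\]")


def check_citations(answer: str, grounding_ids: set[str]) -> dict[str, list[str]]:
    """Flag uncited sentences and citations to documents outside the grounding set."""
    report: dict[str, list[str]] = {"uncited": [], "unknown_sources": []}
    # Naive sentence split on ., ! or ? followed by whitespace
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    for sentence in sentences:
        cited = CITATION.findall(sentence)
        if not cited:
            report["uncited"].append(sentence)
        for doc_id in cited:
            if doc_id not in grounding_ids:
                report["unknown_sources"].append(doc_id)
    return report
```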

2) Bias and unfairness

Risk: outputs that discriminate, for example by gender, age, or language.

Mitigate with: risk management and fairness guidelines (e.g., the NIST AI Risk Management Framework), bias testing, diverse samples, ethics reviews, and decision logs.
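As one example of bias testing, the sketch below compares how often an AI-assisted step (for instance, shortlisting participants for a study) selects people from each group. It divides the lowest group selection rate by the highest and flags ratios below 0.8, the "four-fifths" rule of thumb from US employment-selection guidance. This is a single screening metric, not a full fairness assessment, and the group labels and function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, selected) pairs."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records: list[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest one."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was selected, so there is no disparity to measure
    return min(rates.values()) / highest


def flag_bias(records: list[tuple[str, bool]], threshold: float = 0.8) -> bool:
    """True when the ratio falls below the four-fifths rule of thumb."""
    return disparate_impact_ratio(records) < threshold
```

A flag is a prompt for an ethics review and an entry in the decision log, not proof of discrimination on its own.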

3) Privacy and data protection

Risk: interviews, recordings, and logs sent to models without a clear legal basis.

Mitigate with: data minimization and anonymization, informed consent, a data protection impact assessment (DPIA) where applicable, and alignment with data protection authorities’ guidance (e.g., EDPB, ICO).
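Anonymization should happen before any transcript leaves your environment. The sketch below redacts e-mail addresses and phone numbers with regular expressions, then replaces known participant names with stable codes (P1, P2, ...). The patterns and function names are deliberately simple assumptions: they will miss some identifiers and produce some false positives (a date such as 2024-01-15 can match the phone pattern), so treat this as a first pass, not a compliance control.

```python
import re

# Minimal, assumed patterns; real PII detection needs broader coverage and review.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each pattern match with a label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def pseudonymize(text: str, names: list[str]) -> str:
    """Replace known participant names with stable codes (P1, P2, ...)."""
    for i, name in enumerate(names, start=1):
        text = re.sub(rf"\b{re.escape(name)}\b", f"P{i}", text)
    return text
```

Usage: `pseudonymize(redact(transcript), ["Ana"])` turns "Reach Ana at ana.silva@example.com or +1 (555) 123-4567." into "Reach P1 at [EMAIL] or [PHONE]." Redacting first matters, since the e-mail address also contains the name.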

Good practices for responsible adoption

- Method before model: anchor AI usage in an explicit UCD process.

- Prompt catalog & versioning: standardize prompts; track variants and outcomes.

- Ground truth data: connect AI to reliable internal sources.

- Continuous evaluation: measure quality (accuracy, time, satisfaction, impact).

- AI governance: usage policies, ethics review, audit trail.

- Team enablement: build skills across AI, research, design, and product.
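A prompt catalog can start as a small versioned registry. In the sketch below, each registered template gets an incrementing version number and a short SHA-256 fingerprint, so outputs recorded in your audit trail can be traced back to the exact prompt text. The class names and the user-story templates are hypothetical; placeholders use Python's `string.Template` syntax.

```python
import hashlib
from dataclasses import dataclass, field
from string import Template


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str

    @property
    def fingerprint(self) -> str:
        """Short content hash linking logged outputs to this exact prompt text."""
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]

    def render(self, **values: str) -> str:
        # substitute() raises KeyError if a placeholder has no value
        return Template(self.template).substitute(**values)


@dataclass
class PromptCatalog:
    versions: dict[str, list[PromptVersion]] = field(default_factory=dict)

    def register(self, name: str, template: str) -> PromptVersion:
        """Store a new version of a named prompt and return it."""
        history = self.versions.setdefault(name, [])
        prompt = PromptVersion(name, len(history) + 1, template)
        history.append(prompt)
        return prompt

    def latest(self, name: str) -> PromptVersion:
        return self.versions[name][-1]

    def get(self, name: str, version: int) -> PromptVersion:
        return self.versions[name][version - 1]
```

Old versions stay retrievable, so the team can compare outcomes across variants instead of overwriting prompts in place.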

Start small (30-day plan)

- Week 1: pick one critical flow; define objectives and success metrics.

- Week 2: use GenAI to analyze interviews and update personas.

- Week 3: generate wireframe variations; test with 5 users.

- Week 4: run feedback analysis, fix friction, publish learnings.
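The "5 users" in week 3 follows Nielsen and Landauer's problem-discovery model, in which the expected share of usability problems found by n users is 1 − (1 − p)^n, where p is the probability that a single user exposes a given problem. With the average p ≈ 0.31 they reported, five users uncover about 84% of problems. Your p may differ, which is one reason to test in repeated small rounds rather than once. The function name below is hypothetical.

```python
def share_of_problems_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems found by n_users (Nielsen & Landauer model)."""
    return 1 - (1 - p_detect) ** n_users
```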

To wrap up

GenAI amplifies UCD when applied with method, trustworthy data, and governance. The path is to iterate responsibly—balancing speed with quality and ethics.

How are you using AI in your process? Which risks are you mitigating? Tell us in the comments—or reach out to GPTLab to co-create a controlled pilot for your product.

Note: this article references ISO 9241-210 (UCD) and widely recognized guidance on risk, bias, and privacy. Follow your organization’s official policies and local laws when implementing AI.