The Story: When a Chatbot Forgets Your Shopping List

It was Black Friday morning, and Alex was on his favorite electronics store’s website. He opened the store’s AI-powered chatbot and typed:

“I’m looking for a laptop under €1,000 that’s good for video editing.”

The chatbot replied instantly with three great options, all perfectly matching his needs. Feeling hopeful, Alex asked:

“Do any of these come in a lighter weight? I travel a lot.”

That’s when things went wrong. Instead of refining its list, the chatbot acted like Alex had just started a new conversation. It recommended a random mix of ultra-light laptops, some over €2,000, others great for gaming but terrible for editing, and a few underpowered for basic productivity. Alex sighed. The assistant had completely lost the plot.

The Real Problem: Context Loss

This isn’t about the AI misunderstanding “lighter weight.” The issue is that most AI assistants exist in a short-term memory bubble; they only use what’s spelled out in the current prompt and often forget earlier constraints.

That leads to:

- Context drift: the conversation veers off from original goals.

- Repetition: users re-explain their needs.

- Inconsistency: answers feel disconnected or random.

In Alex’s case, the assistant forgot his budget, use case (video editing), and the earlier suggestions, and started fresh.

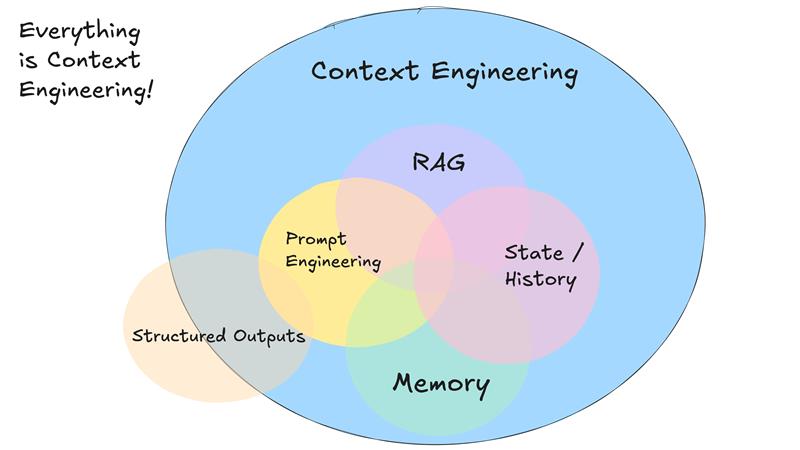

Formal Definition: What is Context Engineering?

Context Engineering is the practice of designing, building, and managing the full set of information an AI model needs to produce accurate, relevant, and consistent responses across one inference or many that fit in the model’s context window.

It’s more than crafting a prompt. It’s about:

- Storing user preferences and conversation history.

- Retrieving external data smartly.

- Structuring context for clarity and efficiency.

Think of context engineering as the stage crew ensuring the AI (the actor) always has the right script, props, and cues, no matter how long the act runs.

How Context Engineering Saves the Day

Imagine Alex’s e-commerce chatbot was built with Context Engineering:

- Memory retention: it stores the original request (budget + editing requirement).

- Constraint chaining: it understands “lighter weight” is a filter, not a new search.

- Smart retrieval: it only fetches laptops matching all constraints (price, editing power, portability).

- Context packaging: it bundles all relevant info into a single coherent prompt.

Alex would have gotten:

“Here are your updated options: all under €1,000, excellent for video editing, and under 1.2 kg — perfect for travel.”

He feels heard, and the store likely earns the sale.

Prompt Engineering vs. Context Engineering:

Prompt Engineering: Crafting concise instructions per task. Focused on how you ask. Great for quick, single-turn experiments.

Context Engineering: Building systems that ensure the AI has all the needed background before responding. Focused on what the AI knows when it answers. Essential for multi-turn, dynamic systems in production.

Where Context Engineering Truly Shines

- E-commerce assistants: Track evolving user criteria like budgets, styles, and preferences.

- Customer support bots: Recall past tickets, user accounts, and resolutions.

- Enterprise AI systems: Combine CRM data, documents, and chat transcripts into unified answers.

- Specialized tools: Legal or medical assistants that juggle constraints across multiple facts.

- Agent workflows: Multi-step systems handling structured context between sub-agents.

Limitations and Challenges

While context engineering is powerful, it comes with notable challenges:

- Overfilling the Context Window: Dumping every possible detail into the model’s context window can overwhelm it, leading to irrelevant outputs or loss of focus. Models have finite context lengths, and exceeding them forces truncation, which can cut out important details.

- Context Poisoning: If incorrect or misleading data enters the context, the model may repeatedly build on that false premise.

- Context Distraction: Including too much irrelevant data can pull the AI away from the main task.

- Context Clash: When new information contradicts earlier content, the model may produce inconsistent answers.

- Engineering Complexity: Building retrieval, summarization, and filtering systems requires careful design and ongoing maintenance.

- Security Risks: Injecting external data into context windows can open the door to prompt injection attacks or unintentional leakage of sensitive information.

These challenges mean that successful context engineering isn’t about “stuffing” the context window; it’s about curating it. Effective systems filter, summarize, and validate the most relevant pieces of information before presenting them to the model.

Conclusion

Without context engineering, your AI is like a sales clerk who forgets your request the moment they look away. With context engineering, AI becomes someone who remembers, anticipates your needs, and delivers every time, whether you’re shopping, troubleshooting, or researching. Simply, we can summarize that prompt engineering helps you speak to AI, and Context engineering helps AI truly understand and remember you.

Read more:

- https://www.datacamp.com/blog/context-engineering

- https://www.dbreunig.com/2025/06/22/how-contexts-fail-and-how-to-fix-them.html

- https://blog.langchain.com/the-rise-of-context-engineering/

- A Survey of Context Engineering for Large Language Models

- https://www.codecademy.com/article/context-engineering-in-ai?utm_source=chatgpt.com

- https://medium.com/@tam.tamanna18/understanding-context-engineering-c7bfeeb41889